Alright guys! This has been several years in the works. Sorry for the delay (and the rambling that ensues in this post). Hope it helps!

A very common question @ CCDC events are “What tools did you use?” and “How did you hack us?”

I do use a number of off the shelf tools such as metasploit and nmap, but a good part of my work during the competition is done with custom tools. This is true for a number of us on the National Red Team, with each of us having particularly effective toolboxes. Every year, we take this further and further down the rabbit hole and the toolchain used this year across all red teamers was by far the most custom and effective. With the exception of a few collaborative efforts, I’m going to withhold speaking about other red teamer’s tools and attempt to focus on mine. But rest assured, what you see here is simply a glimpse into the arsenal you meet in San Antonio.

This has not been an easy post to write. The last few years I’ve been a big cagey about discussing these custom tools publicly. Unlike professional adversaries, we have to do much of this work in our spare time. CCDC is ultimately an educational experience, so I absolutely want to share as much as possible. My devil’s advocate position has been that the experience is part of the competition, and if I spill the beans on our tools, we won’t be able to provide the realistic threat you’ve come to expect.

It’s definitely a gray area, but I’m going to go ahead and talk about the tools I’ve spent the last few years working on. The toolbox gets divided into two categories: infrastructure and malware.

Keep in mind as we dive in, that I’ve only red teamed Western Regional and Nationals, with Pacific Rim last year being the exception. If you’ve never competed in any of those, then you likely have never seen these. I’m going to work this year on getting some parts of this tool chain distributed out to the regionals. Even if you have done those competitions, you likely haven’t seen everything in this list either. With CCDC environments vastly different from scenario to scenario, tool reliability is one of our biggest challenges. This makes making tools work a per competition mission. I can only prep so much, so they end up getting deployed in order of their importance. Also, I’ve intentionally withheld some details. I’ll leave those up to you guys to figure out 🙂

A Brief Note on Codenames

Anyone who’s worked with me knows that I love a good codename. We do a lot of R&D and codenames help keep projects in their lane. Sometimes the codename makes sense; less so for others. What you can expect is the following tools can be identified by CODENAME because they will be represented in ALLCAPS.

Also, I’ve attached random pictures from the inter webs to make this long, arduous post more entertaining.

So without further ado!

Infrastructure

Infrastructure tools are things that make us more efficient as a Red Team. These pieces of software run on our servers, never on blue team hosts. If you were at Nationals this year and saw the “Red Team Infrastructure” slide in Dave Cowen’s debrief, this is what he’s talking about.

This year, I migrated every infrastructure component to a service oriented architecture. With the exception of TRAPHOUSE, everything else follows SOA principles with HTTP based API’s for inter process communication. This allows these services to integrate with each other in ways that weren’t possible before, as well as deal with problems of scale more effectively.

TRAPHOUSE

This is where the fun begins. TRAPHOUSE is the main brain for the red team. TRAPHOUSE has three main components:

- Web UI

- Backend

- API

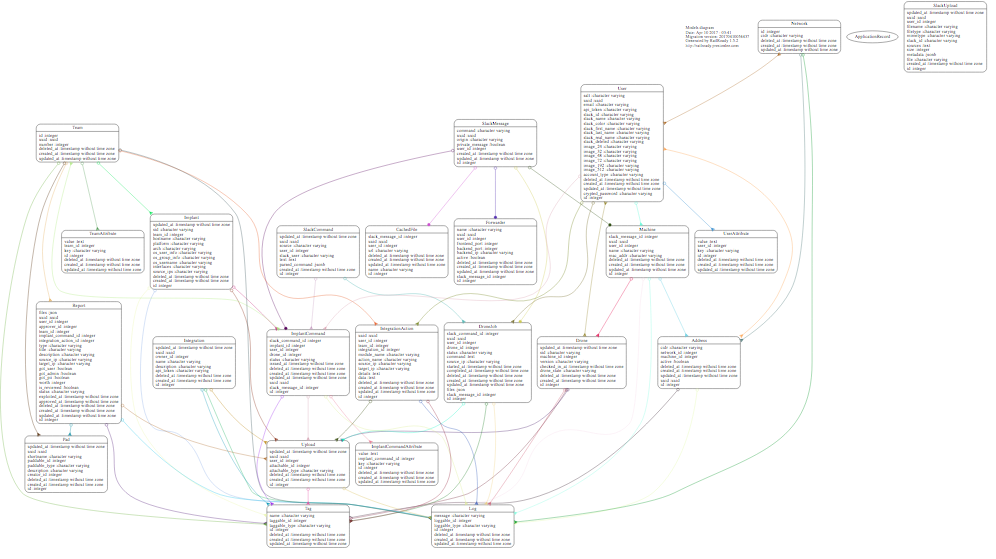

TRAPHOUSE maintains source of truth state for most pieces of our infrastructure. We do a lot of things with TRAPHOUSE, but most importantly, we file our compromise reports there. Black Team has access and manages Red Team scoring based on the data in TRAPHOUSE. To give you a peek into its “brain”, here’s a screenshot of the entity-relation diagram for the PostgreSQL database behind it:

Another key feature of TRAPHOUSE is its Pads. These are basically Etherpads within TRAPHOUSE that let us collaboratively share and store information between red teamers.

TRAPHOUSE has evolved over several years and is one of my oldest tools. It was the product of a marathon hacking session and until this year, was basically duct tape over duct tape. This year, it got a complete re-write with the help of some awesome contributors (Email me if you’re looking to hire Rails devs! They’re great!):

- Reuben Brandt

- Jonathon Nordquist

- Jeremy Oltean

Together, we were able to take the old crappy code and make it far more modular and extensible. There will be more work to come on TRAPHOUSE, but it’s in a great place to continue to be a centerpiece of our red team activities.

The next big feature I’ll be shipping in TRAPHOUSE is a C2 API. The goal is that other red teamers can integrate their C2s into TRAPHOUSE for other people to interface with and use.

OG

While a lot of stuff happens in TRAPHOUSE, the other interface into my tool chain is a Slack bot called OG. OG has extremely tight integration with TRAPHOUSE and is the main interface for most red teamers. You interact with OG with “!” commands, and OG is channel and user aware. You can invite him to rooms, private message him, etc. and he will always respond where appropriate.

OG also manages authentication and accounts for TRAPHOUSE. You register for TRAPHOUSE through OG, and OG won’t talk to you unless you’ve got a valid TRAPHOUSE account.

With inspiration from the “ChatOps” movement, I’ve attempted to make all my tools (especially the infrastructure) available to the rest of the red team via OG commands.

DOOBY

DOOBY might have been the most challenging piece of code I’ve worked on. ever. DOOBY is a custom DHCP Server that I wrote specifically for CCDC Red Teams. The solutions it solved over traditional DHCP servers:

- It’s hard to manage the IPs you use as a Red Teamer in a CCDC environment.

- Humans are not as good as computers at computing random numbers.

- If two red teamers collide IPs, we lose time, and potentially shells.

As Blue Teams have gotten better with regard to network defenses, we’ve had to evolve too. It has taken a few years for DOOBY to be realized. DHCP is an incredibly complex protocol when you get into the nitty gritty, and implementing it from the ground up took countless hours of testing to get it stable.

DOOBY fixes some major red team pain points in several ways:

- Records all IP leasing in TRAPHOUSE and ties it to the Red Teamer for correlation w/ their compromise reports

- Distributes leases across several red team networks

- Allows IPs to be rotated very rapidly without fear of stomping someone else’s IP

In addition to red teamer laptops and VMs, DOOBY manages addressing for my other infrastructure components as well. As you read on, keep that in mind. DOOBY is perhaps the least sexy, but one of the most integral pieces of our infrastructure besides TRAPHOUSE.

DOOBY got its first prime time action this year, but I’m looking forward to iterating on it. There’s still work to be done to make it more user friendly for other Red Teamers, but it supported much of this infrastructure during Nationals this year.

BORG

BORG was born a few years ago in the wee hours of the morning before Western Regionals. I wanted some way to do MSF exploits from random IP addresses but have second stages be centrally managed, and since I’m lazy – let’s integrate it with Slack!

What we ended up with when the sun came up was a beast. BORG is a fleet of “Drone” VMs that do our bidding. We use BORG for all sorts of things – scans, exploit launching, and spraying. The Drone VM itself has several valuable tools preloaded on it:

- metasploit

- crackmapexec

- spraywmi

- nmap

- sqlmap

- database tools

- snmp tools

- phantomjs

- + many more

TRAPHOUSE manages a work queue and the Drones continuously check in seeing if any work needs to be done. Now, when a red teamer wants something done, they simply issue a command in slack:

!borg run nmap -sS -T4 -Pn -oA team_6_quick 10.6.6.2-254

This queues the NMap scan job up. When a free worker retrieves it from the queue, it’ll hop IPs (using DOOBY), perform the task, and return all output (STDOUT and any new files) to TRAPHOUSE. OG then sends a notification to the Slack user who submitted the job with download links to their files.

GRID

GRID is one of those tools when you explain it to people, they give you a funny look somewhere in between “u wot m8?” and “i cant even”. Together, BORG and GRID act as a yin and yang as our red team “bot net”. Where BORG “throws” things from a distributed block of IPs, GRID “catches”.

In one sentence, GRID is a piece of physical hardware which acts as tens of thousands of infected hosts on the network. These “hosts” can proxy call backs and serve malicious files.

GRID has been an incredible piece of technology for us. With GRID effectively acting as a multi-tier, distributed, C2, red teamers can host their implant C2s on static IPs provided by DOOBY. With GRID, they never have to change that IP, and you as the blue team never have direct contact. The C2 remains effectively hidden in the tens of thousands of IPs within GRID’s network.

This abomination of CCDC technology actually has several components that make this possible, so I’ll outline them below.

GRID-KERNEL

GRID distributes itself on the network by creating tens of thousands of virtualized network interfaces. This requires a custom Linux kernel that we’ve named the “GRID-KERNEL”. Getting this stable was one of the hardest parts of GRID due to extremely slow development loop needed to test this.

GRID-CONFIG

The next step of the stack is configuration utility. This tool is what GRID uses to bootstrap its network interfaces. It talks over the SOA backbone to DOOBY and TRAPHOUSE to get a block of permanent addresses for the competition (one for each of its roughly 50,000 interfaces).

These addresses are then blacklisted in DOOBY from being leased. TRAPHOUSE also holds a record of them for black team correlation and for other things we’ll talk about later.

GRID-PROXY

The next major component of GRID is its layer 4 proxy. From within Slack, a red teamer can “reserve” frontend ports in GRID and have them reverse proxy back to them:

!grid forward --frontend_port 1337 --backend_ip 10.0.0.123 --backend_port 4444

This command will now listen on port 1337 across GRID’s network interfaces and forward the traffic to 10.0.0.123:4444. Now when a red teamer needs to know a call back IP:

!grid ip

OG will grab a random IP from GRID’s IP table in TRAPHOUSE and return it to Slack. The !grid command in Slack is extensive with several more capabilities. It is completely self service for the red team and supports both TCP and UDP.

GRID-CDN

There are a few ports that are reserved in GRIDs proxy. Two of them are 80 and 443. These ports actually get reversed proxied to GRID’s CDN service. The CDN service acts as a layer 7 router sending traffic matching rules to pre-defined places. As of now, this is defined by me at game time, but next year I’ll integrate this into OG for layer 7 HTTP self service.

If none of the routes are matched though, GRID then attempts a lookup against a local DB for a matching URI. If the URI is known, the CDN will lookup the corresponding file in its CDN Origin and serve it.

This allows us to “push” files to GRID and retrieve them in a distributed fashion. The file object can be referenced across many of my tools for interpolation with its GRID file ID. An example of this might look like:

!grid host --url https://my.super/leet.malware --name dabomb

OG will push a CDN cache job to TRAPHOUSE, which will make an SOA call to GRID’s API. GRID will download the file at the specified –url, hash it, and keep a reference using the –name field. It will generate a shortcode for you and pass it back to TRAPHOUSE, which OG will pick up and respond to you with the following:

http://[RANDOM_GRID_IP]/[RANDOM_SHORTCODE]

That file can now be retrieved from anywhere in the CCDC network using that URL. If Dan wants a different URL, he can simply issue the command:

!grid link --name dabomb

and he will get a new URL with a different IP and different short code. A number of options exist too, such as DNS hostnames, ports, # of downloads you want the file to be available for, etc.

GRID’s CDN gives us totally ephemeral staging of binaries and payloads. When a stage 2 needs to be retrieved on an infected host, it can pull from an IP address that you’ve never seen, and likely won’t again.

Over the last few years, blue teams have become more and more effective at network defenses.

While the CCDC scenario is realistic in many ways, one of the ways blue teams have found ways to gain an advantage is in monitoring network traffic. BORG and GRID give us the capability to completely negate this tactic. I’ve yet to see a blue team effectively defend against this.

GRID-CRYPTO

The final component of GRID deals with how GRID manages cryptographic material. TCP443 is a reserved port on GRID and serves a wide variety of HTTP virtual hosts on it. GRID this year was resolvable by 67 different domains that I own, each with unique and interesting subdomains. It was too much work to manually get HTTPS certificates for all of them, so I wrote a piece of code to use AWS Route53 and and EC2 instance to automatically generate valid SSL certificates for all domains and their subdomains. This tool would then spit out a configuration file for GRID that it could then use to serve valid HTTPS on requests that matched the correct Host parameter.

THEFOOT

In order for GRID to get DNS resolution, we needed a way to dynamically serve GRID IPs via DNS. We originally ran a complicated chain of BIND servers, but overnight at Western Regionals this year, I wrote THEFOOT. THEFOOT is a custom DNS server that very simply serves a random GRID IP for any valid GRID domain. It retrieves the DNS zones at startup from TRAPHOUSE and responds to any recursive query for those domains, so we set the NS records of all our domains at THEFOOT’s IP during the competition and any resolver would return competition IPs to you.

RTDNS

RTDNS is a separate DNS server that red teamers can choose to point to when using DOOBY. In many CCDC environments, every team has the same FQDN “foo.ccdc” for example. This means that to resolve “http://shop.foo.ccdc” in the browser, we have to point to your DNS server.

RTDNS solves this by letting a red teamer “lock” their resolution for a domain to a specific upstream resolver. This allows them to stick to their team’s DNS for HTTP/S resolution.

In previous years, we’ve actually run individual RTDNS servers for each team in Docker containers, but by 2018, RTDNS will be its own stand alone singular application with Slack Integration.

MRWIZARD

MRWIZARD is a distributed scanner made by Lucas Morris. MRWIZARD continuously runs a variety of port and service discovery scans against all the teams from a giant block of IPs (similar to how GRID manages its IPs).

MRWIZARD then uploads its scans to TRAPHOUSE for red teamers to grab when they need it. It also watches services and when new services are seen, it alerts TRAPHOUSE to this wonderful new discovery.

CRACKLORD

This is another Lucas Morris and Crow creation. CRACKLORD is an on demand hash cracking system. Maus used his DevOps magic and worked closely with Lucas to get a CRACKLOARD autoscaling EC2 version deployed for this year. The more hashes you want to give us, the more we’ll crack!!!

Currently in progress is CRACKLORD integration with OG and TRAPHOUSE so that cracking jobs can be scheduled directly from Slack as well as hash lookups from previously cracked hashes.

FOXCONN

This was something Maus and I dreamed up years ago that has fallen a bit stale as of late, but is something we’ve used successfully. FOXCONN basically acts as an MSFVENOM server that generates MSFVENOM payloads over an HTTP API. It even has the ability to generate a payload based on request user-agent, so that whenever you get on a box, you can just hit the FOXCONN API and you’ll get returned a payload for that platform.

Now that we’ve re-architected TRAPHOUSE and OG, FOXCONN is up next for its makeover with even more exciting features.

BANEX

To test much of our infrastructure, we employed a huge VMWare infrastructure. BANEX is our vSphere cluster with several dozen VMs of varying operating systems and architectures. We use this as a firing range to test our payloads and tools.

Custom Malware

These are the custom pieces of software I’ve written over the years to stay on your boxes and give you a seriously hard challenge.

GOOBY

In order to seriously decrease our coding effort, Dan, Vyrus, and myself converted almost all of our malware tools and techniques into shared libraries which could be included on a per function basis and compiled into our implants.

GOOBY is the base library that all of these extend from. Most of the tools below, are actually library functions implemented in GOOBY. This allows implants to share modules depending on what purpose we want the implant to serve.

For individual binaries, they’ll have their own build scripts that will (usually) statically compile the included GOOBY modules into the final implant.

GENESIS

Genesis is the successor to the much alluded to [REDACTED] tool which acted as a all-in-one persistence script. One common technique we use is “bundling” of payloads so that when exploitation occurs, you can run a single payload and get multiple pieces of persistence deployed. [REDACTED] was the product of one of the darkest of wizards on our Red Team. It evolved over the years to support both *nix and Windows.

This year, Dan, Lucas, Vyrus, and myself decided to test some new, unique features and created GENESIS. GENESIS is a small program that can be dynamically built and obfuscated with an arbitrary number of payloads included in it. This allowed us to abstract much of our local persistence techniques that originally lived in the [REDACTED] code into GOOBY, for easy use by any of our payloads.

We spent a lot of time working out obfuscation and anti-RE techniques into GENESIS. This is extremely important because now if a GENESIS binary is intercepted, significant effort will be needed in order to determine the other payload it encompasses. We’re lagging behind on some platforms in this area, but by next year we expect GENESIS and its supported obfuscation to be broadly supported.

Huge huge props to original developer (he knows who he is) and all the contributors to this effort over the years.

AMBER

AMBER is a custom shellcodeexec I wrote in San Antonio this year. I wrote my own for two reasons:

- Many shellcodeexec implementations are easily detected by AV (we don’t want that!)

- AMBER is implemented as a GOOBY module for easy use in other implants

AMBER also supports in memory process injection and lets us migrate payloads into other processes with only a few lines of code. Extremely handy.

EXODUS

EXODUS is a library that builds on top of AMBER to perform similar functionality as GENESIS, but with shellcode instead of persistence.

In essence, EXODUS allows the operator to “bundle” an arbitrary group of shellcode payloads and create a loader which will execute the shellcode, either spawning a new process or injecting into an existing one (operator configurable).

This gives us the same “spray” capability that GENESIS gives, but with raw shellcode.

QBERT

You can think of QBERT as somewhere between a rootkit and the void of space. It’s extremely difficult to hunt down, it leaves very little signatures, and QBERTs sole purpose is to keep other GOOBY based malware persistent on the infected host. It does this through a variety of techniques that span OS functions, but suffice to say, it’s one of the dirtiest tools in the box. As of this blog post, it still is almost entirely undetectable by any major IDS/AV/Audit vendor.

GEMINI

GEMINI is an implant I’ve slowly developed over the last few years to keep me in systems when most other persistence techniques have been discovered. It doesn’t have a ton of functionality, but it’s small and extremely portable – it cross compiles to basically any operating system.

GENESIS allows access to the infected hosts filesystem and command execution by the operator and is also the first Implant that used the GRID network for its C2 callbacks. GEMINI will assess network conditions on the infected host to determine what time of covert channel to use for a call back.

The callbacks are asynchronous, so as an operator, you have to consider lag time between when you issue a command and when GEMINI comes home, but it’s been a reliable implant. A huge thank you to Vyrus for putting in countless hours improving its capabilities this year.

Vyrus and I also wrote a SPEC for generic C2 communication. This new protocol will be the basis for many different implants moving forward and the foundation for extending C2 interaction to OG and TRAPHOUSE.

WONKA

Dan talks often about his troll payloads, but I have a few of my own. WONKA historically has been a text file of special *nix local persistence techniques. Linux systems at CCDC events have a tendency to be very specific in their build and configuration, so I’d just keep the text file open and pick and choose what little one liners to deploy at competition time.

This year, I started the process of converting WONKA into a GOOBY module and adding meta wrappers around it. Some of the functions you might have seen if you’ve encountered WONKA:

- “BASHLOTTO” – A bash trick that will lottery a command entered into BASH for a potentially other command. (Usually 25% chance on the dice roll)

- “ANTIEDIT” – A custom wrapper around common editors that reverts changes on files you change.

There’s several more, but that gives you a few basic ideas of how WONKA causes you headaches during the competition.

I’m a big believer in WONKA because I think troubleshooting and performing root cause analysis is actually incredibly difficult. WONKA’s goal is to challenge you to think critically about how your systems may or may not be behaving and to validate assumptions about your tools.

SSHELLY

SSHELLY is a persistence technique that attacks the OpenSSH server. SSHELLY has several options for nefarious behavior, two of which are:

- “GODKEY” – Allow access for any user with a specific SSH key

- “LOUDPACK” – Saves password credentials for users in memory. When a specific backdoor user connects, it dumps the credentials and disconnects the client.

SSHELLY still has a few bugs here and there, but it’s maturing nicely and is an amazing complement to my toolbox. By deploying SSHELLY, many linux hosts which must maintain a running SSH service are now able to be persisted in a difficult to detect manner.

ALFRED

Every year, there are things I want to do in a recurring manner on your systems. While cron and Scheduled Tasks are reliable, they’re easily detected. ALFRED is my own lightweight “cron” runner. I can either compile tasks into the ALFRED binary, or leave a config file on disk for ALFRED to search and find.

While it’s not functional yet, I’m experimenting with memory cradles that will allow other implants to schedule tasks simply by writing to a shared memory segment.

HALITOSIS

I’ve been playing with audio/video drivers at CCDC competitions for a few years now. The obscure operating systems and the lack of packages generally makes intereacting with peripherials challenging. HALITOSIS is my GOOBY module that for listening to microphones on your system. I’m still in the process of porting much of my old code into the new GOOBY module, but it’s a great tool for listening in on whats going on in the blue team rooms.

TATTLER

Network visibility is something we spend a lot of time working on during the competition. Teams often put up firewalls and NAT their internal networks. This makes scanning from our external position difficult. TATTLER is a GOOBY module designed to perform adhoc port scanning and service discovery of your networks FROM THE INSIDE. It’s not as feature rich as NMap, but it’s extremely fast, portable, and configurable based on the operator’s desire. Any GOOBY based implant now has access to theTATTLER library to perform recon on otherwise unknown network segments.

MOTHRA

To keep it short and sweet (and not reveal too much), MOTHRA is fly paper for system credentials. At less than 1k, it’s definitely my smallest payload, but it’s one of the most deadly. It was only in use for a few hours at the end of day 2 NCCDC, and it managed to gather over 500 UNIQUE credentials.

Tony, you know you da man. This is going to be a very fun tool for years to come.

Conclusion

So there you have it. On top of the industry tools (Metasploit, Cobalt Strike, Empire, etc.), we have to bring custom tools. If we didn’t develop tools like these, you wouldn’t be facing a realistic simulation of a world class adversary. (Looking at you CIA *cough*VAULT7*cough*). As put best in The Art Of War:

So it is said that if you know your enemies and know yourself, you will not be put at risk even in a hundred battles.

If you only know yourself, but not your opponent, you may win or may lose.

If you know neither yourself nor your enemy, you will always endanger yourself.

I know it’s not a tell all, but hopefully this gives you some insights into the lengths we go to challenge you. This is exactly why CCDC is the best learning environment for information security.

I cannot, cannot, cannot stress enough how awesome it is to work with some of the world’s leading experts in information security. Between the pull requests and the mentorship, working on these systems with my fellow red teamers is some of the most fun I get to have all year. Shoutouts to several brethren who contributed to these tools:

- ahhh

- vyrus

- deadmaus_

- cmc

- emperorcow

- javuto

- tony

With that, I’ll leave you guys to prep for next year! Best of luck >:)